The limitations of being human got you down? There’s a robot for that

Humans, you might have noticed, aren’t perfect. We crash cars. We injure ourselves performing hazardous labor like mining and construction. And we fare poorly in extreme environments we’d like to explore – like the deep ocean or the vacuum of space.

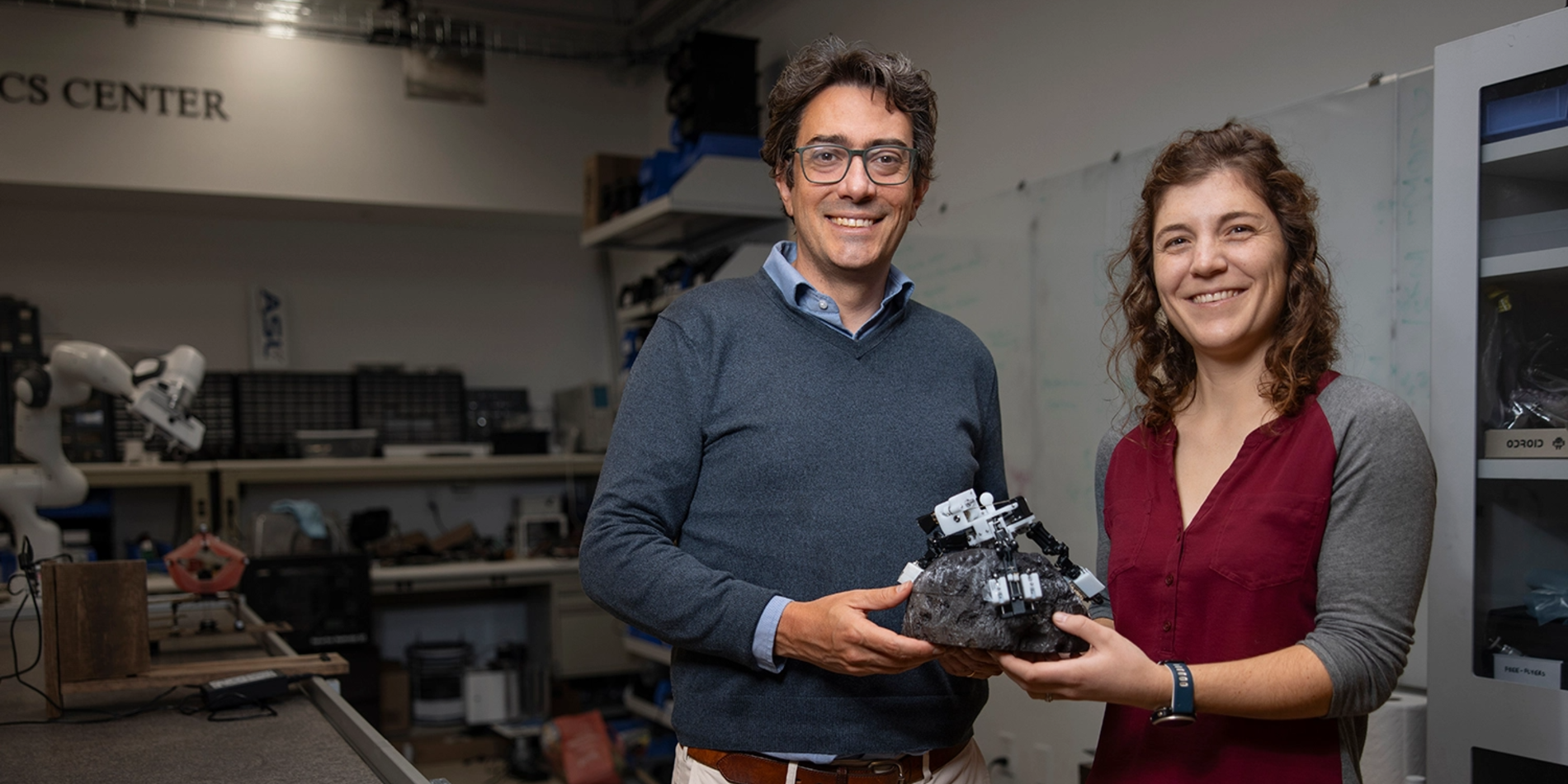

But Marco Pavone, associate professor of aeronautics and astronautics, and his students in the Autonomous Systems Lab (ASL) hope that emerging technologies like self-driving cars and space robots could one day help us get around these shortcomings.

“It takes a lot of effort and equipment to keep people alive in space,” said Stephanie Newdick, a PhD student in Pavone’s lab. And we’re not exactly indestructible on Earth, either. Sometimes, she said, “It just makes more sense to send a robot.”

An ideal self-driving car, for instance, should make fewer mistakes than a human driver, allowing passengers to reach their destinations more efficiently and lowering the risk of an accident. And autonomous space robots could repair satellites or explore Martian landscapes – saving astronauts from life-threatening tasks while also pushing the boundaries of space exploration.

An endless stream of decisions

Achieving that level of competence for a space robot or self-driving car, however, is easier said than done. Autonomous systems like these must be able to make decisions and then translate them into physical actions in unpredictable – or completely alien – environments.

The ASL tackles both challenges, starting by designing decision-making algorithms that treat any scenario as a cost-benefit optimization problem. A self-driving car, for instance, must calculate which series of actions will let it cross an intersection fastest while avoiding pedestrians and not jostling passengers, all within a few milliseconds. Beyond following the fundamentals of good driving, such as staying in its lane and obeying traffic signals, this also involves anticipating how the people around it will react.

“If the robot does something, how might a nearby human respond?” Pavone said. “And based on that, how does the robot make its next decision?”

Decision-making models also have to navigate the mishmash of cues a car might encounter – recognizing, for instance, that a stop sign printed on a billboard does not mean “stop.” Pavone’s lab is using large language models to design systems that can account for context in scenarios like this. Ideally, Pavone said, those systems will be able to learn from experience.

“You see a lot of ‘firsts’ in your life, but you appeal to past experience and you don’t freak out,” Pavone said. “It’s pretty hard to do that with a robot.”

New tools for new worlds

All that decision-making does no good, however, unless a robot can translate it into effective physical actions in unfamiliar environments – for instance, zero gravity.

That’s why Pavone’s lab has an air hockey table (or something close to one). Robot prototypes on its ultra-smooth granite surface shoot jets of air downwards and sideways, gliding over it like a puck. This way, Pavone’s lab can test how well the robots’ algorithms translate into lateral (though not vertical) motion in a zero-gravity-like environment.

To ensure robots can do their jobs once they get where they’re going, the ASL also works on gripping tools, such as microspines for hooking into irregular surfaces and a gecko-inspired adhesive co-developed with the Cutkosky lab. Technologies like these could enable robots such as Astrobee, a free-floating assistant aboard the International Space Station (ISS), to move cargo, fetch tools, make repairs, and clear debris.

“An astronaut’s time is very valuable, but a significant amount of it is spent on housekeeping. Ideally, you would use a robot for that,” Pavone said, chuckling. “It’s fascinating work, but at the end of the day, we’re building robots to remove junk.”

But the ASL’s designs could also help with space exploration. Take ReachBot, one of Newdick’s main projects. Its eight arms extend like a tape measure, with grippers at the ends. Paired with an autonomous navigation system, this design could let ReachBot clamber around caves and tunnels on Mars, exploring them without risking a human life, though probably not anytime soon.

“If I’m really lucky, in 20 years, my work will inspire another robot that does something different, but related,” Newdick said. “But if some part of what you do can contribute to something bigger, then that’s the goal.”

Always more to explore

Deploying autonomous systems has its downsides, especially on Earth, where robots could take over some jobs currently held by humans.

“That’s always in the back of my mind,” Pavone said. “We develop systems that hopefully will create opportunities in the long run, but in the short term might cause significant pain for some segments of society.”

Pavone and his students collaborate with other schools across Stanford to understand those consequences and design strategies to mitigate them, such as using AI to redistribute toll revenue from drivers who can afford tolls to those who can’t.

But with space robots, not even the sky’s the limit. Pavone gives the example of Mars: 20 years ago, the goal was just to land a robot there. Now, we’ve sent a helicopter to capture aerial views of the surface, and with ReachBot, we’re hoping to look underground.

“You always want to get into more complicated environments,” Pavone said. Newdick agreed: Given the resources, she said, she’d love to design a robot to crawl down the vents in the icy crust of Enceladus, a moon of Saturn, to search for signs of life – another exploration likely impossible without autonomous robots.

“Learning where we came from, where the planets and stars came from – that’s always been a curiosity,” Newdick said. “We can learn a lot doing science on Earth, but we can learn more if we also look elsewhere.”